Hello everyone, welcome to my Docker series. In this series, I’m going to cover Docker in detail and give you the skills you need to get started with this amazing technology. This first section covers the nitty-gritty details of what Docker is and why you should start using it.

Docker is a platform for building, running, and shipping applications in a consistent manner. If your application works on your development machine, it can run and function the same way on other machines. If you have been developing software for a while, you have probably come across a situation where your application works on your development machine but doesn’t work somewhere else.

This can happen when:

● One or more files are missing from your deployment.

● The target machine is running a different version of some software (a software version mismatch).

● The configuration settings differ across the machines.
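Here is a minimal Python sketch that makes these three causes concrete. The `Environment` snapshot and the `explain_mismatch` function are hypothetical names used for illustration only and are not part of Docker. The sketch simply compares what two machines provide to an application. Docker’s answer is to ship the files, software versions, and configuration inside the image, so the application sees the same setup everywhere.

```python
from dataclasses import dataclass, field


@dataclass
class Environment:
    """Hypothetical snapshot of what a machine provides to an application."""
    files: set[str] = field(default_factory=set)            # files deployed with the app
    versions: dict[str, str] = field(default_factory=dict)  # software name -> version
    config: dict[str, str] = field(default_factory=dict)    # setting name -> value


def explain_mismatch(dev: Environment, target: Environment) -> list[str]:
    """List the differences that could make the app fail on `target`."""
    problems = []
    # 1. Files present on the development machine but missing from the deployment.
    for name in sorted(dev.files - target.files):
        problems.append(f"missing file: {name}")
    # 2. Software installed in a different version (or not installed at all).
    for name, version in sorted(dev.versions.items()):
        other = target.versions.get(name)
        if other != version:
            problems.append(f"version mismatch: {name} {version} vs {other}")
    # 3. Configuration settings that differ between the two machines.
    for key in sorted(dev.config.keys() | target.config.keys()):
        if dev.config.get(key) != target.config.get(key):
            problems.append(f"config differs: {key}")
    return problems
```

For example, compare two snapshots:

- a development machine with `app.py`, `.env`, Node 14.17.0 and `PORT=3000`;
- a server with only `app.py`, Node 9.11.2 and `PORT=8080`.

The function reports one problem of each kind.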

This is where Docker comes to the rescue. With Docker, we can easily package up our application with everything it needs and run it anywhere, on any machine with Docker. If someone joins your team, they don’t have to spend hours setting up a new machine to run your application, and they don’t have to install and configure all the dependencies your application uses. All they need to do is tell Docker to bring up your application with “docker-compose up”. Docker will then automatically download and run those dependencies inside an isolated environment called a container. This is the beauty of Docker.

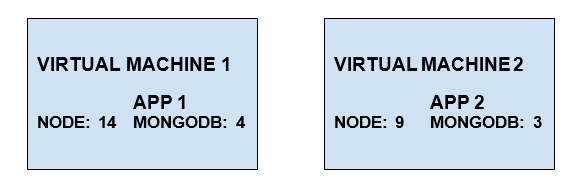
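If you prefer to drive this from a script, here is a minimal Python sketch. It builds the argument list for the command and runs it with `subprocess`. `compose_up_command` and `bring_up` are hypothetical helper names.

The sketch assumes Docker and Compose are installed, and that the project directory contains a Compose file such as `compose.yaml` or `docker-compose.yml`. Newer Docker installs invoke Compose as `docker compose`, while older ones use the standalone `docker-compose` binary quoted above, so the sketch supports both.

```python
import subprocess


def compose_up_command(legacy: bool = False, detach: bool = True) -> list[str]:
    """Build the command that starts every service in the project's Compose file.

    Hypothetical helper for illustration; it is not part of Docker itself.
    """
    # Compose V2 runs as a Docker CLI plugin (`docker compose`); older
    # installs use the standalone `docker-compose` binary instead.
    cmd = ["docker-compose", "up"] if legacy else ["docker", "compose", "up"]
    if detach:
        cmd.append("-d")  # run the containers in the background
    return cmd


def bring_up(project_dir: str, legacy: bool = False) -> None:
    """Run the command in the directory that holds the Compose file."""
    subprocess.run(compose_up_command(legacy), cwd=project_dir, check=True)
```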

This isolated environment allows multiple applications to use different versions of the same software side by side. For example, one application can use Node version 14 and another can use Node version 9, and both can run on the same machine without interfering with each other. This is how Docker allows us to run applications consistently on different machines. When we are done with a particular application and don’t want to work on it anymore, we can remove the application and its dependencies in one go.
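To illustrate two Node versions living side by side, the Python sketch below runs `node --version` in throwaway containers. The containers are created from the official `node:14` and `node:9` images. Neither Node version has to be installed on the host.

The sketch assumes Docker is installed and can pull from Docker Hub. `node_version_command` and `compare_node_versions` are hypothetical helpers.

```python
import subprocess


def node_version_command(tag: str) -> list[str]:
    """Hypothetical helper: `docker run` command printing Node's version in `node:<tag>`."""
    # --rm deletes the container as soon as `node --version` exits.
    return ["docker", "run", "--rm", f"node:{tag}", "node", "--version"]


def compare_node_versions(tags: list[str]) -> dict[str, str]:
    """Run each Node version in its own container and collect what it reports."""
    results = {}
    for tag in tags:
        out = subprocess.run(
            node_version_command(tag), capture_output=True, text=True, check=True
        )
        results[tag] = out.stdout.strip()
    return results
```

On a machine with Docker, `compare_node_versions(["14", "9"])` should return a dictionary mapping each tag to the version string its container reports. The exact patch versions depend on the images you pull.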

Without Docker, as we work on different projects, our development machine gets bloated with libraries and tools used by the different applications. After a while, we don’t know whether we can remove one or more of these tools, because we are afraid of breaking some application.

With Docker, we don’t have to worry about this. Each application runs with its dependencies inside an isolated environment, so we can safely remove an application along with all its dependencies to clean up our machine. So in a nutshell, Docker helps us consistently build, run, and ship applications.
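If the application was started with Docker Compose, removing it in one go could look like the minimal Python sketch below. `compose_down_command` and `clean_up` are hypothetical names. The flags are real `docker compose down` options:

- `--rmi all` removes the images the services used.
- `--volumes` removes the named volumes declared in the Compose file, as well as anonymous volumes.

Be careful: removing volumes deletes any data stored in them.

```python
import subprocess


def compose_down_command(remove_images: bool = True, remove_volumes: bool = True) -> list[str]:
    """Hypothetical helper: build a `docker compose down` command for a full cleanup."""
    # `down` stops and removes the containers and networks that `up` created.
    cmd = ["docker", "compose", "down"]
    if remove_images:
        cmd += ["--rmi", "all"]  # also remove the images used by the services
    if remove_volumes:
        cmd.append("--volumes")  # also remove declared named volumes and anonymous volumes
    return cmd


def clean_up(project_dir: str) -> None:
    """Remove the application and everything Compose created for it."""
    subprocess.run(compose_down_command(), cwd=project_dir, check=True)
```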

A virtual machine is an abstraction of a machine, or physical hardware, so we can run several virtual machines on a real physical machine. For example, on a Mac we can run two virtual machines, one running Windows and the other running Linux. To do this, we use a tool called a hypervisor. In simple terms, a hypervisor is software used to create and manage virtual machines.

Examples of hypervisors are:

● VirtualBox

● VMware

● Hyper-V (Windows only)

VirtualBox and VMware are cross-platform; they can run on Windows, macOS, and Linux.

For us as software developers, this means we can run an application in isolation inside a virtual machine. On the same physical machine, we can have two virtual machines, each running a completely different application with the exact dependencies it needs.

All these applications run on the same machine but in different isolated environments. That is one of the benefits of virtual machines, but this model has a number of problems:

● Each virtual machine needs a full-blown operating system (OS).

● Virtual machines are slow to start because the entire OS needs to be loaded.

● Virtual machines are resource-intensive because each VM takes a slice of the actual physical hardware resources, like CPU, memory, and disk space.

A container is an isolated environment for running an application.

● Containers give us the same kind of isolation, allowing us to run multiple applications in isolation.

● Containers are lightweight; they don’t need a full operating system.

● All containers on a single machine share the OS of the host.

● Containers are very fast to start: because the operating system has already started on the host, a container can start up very quickly.

● Containers need fewer hardware resources.

So these are the differences between containers and virtual machines.

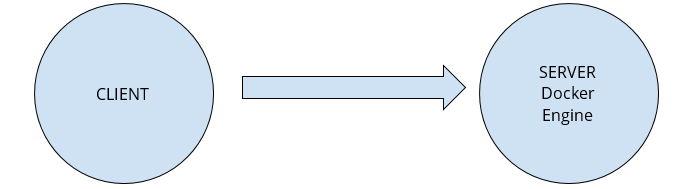

Docker uses a client-server architecture: a client component talks to a server component through a RESTful API.
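Here is a minimal Python sketch that makes the client-server split concrete. It uses only the standard library to call the Engine’s REST API directly, the same API the `docker` CLI client uses. `UnixHTTPConnection` and `engine_get` are hypothetical names. `GET /version` and `GET /containers/json` are real Engine API endpoints.

The sketch assumes the following:

- You are on a Linux host.
- The Engine listens on its default Unix socket, `/var/run/docker.sock`. Docker Desktop and rootless setups may use a different path.
- Your user is allowed to access that socket.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTP connection that talks over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        # "localhost" only fills in the Host header; no TCP connection is made.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        try:
            sock.connect(self.socket_path)
        except OSError:
            sock.close()
            raise
        self.sock = sock


def engine_get(path: str, socket_path: str = "/var/run/docker.sock"):
    """Send `GET <path>` to the Docker Engine and return the decoded JSON body."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        body = resp.read()
        if resp.status != 200:
            raise RuntimeError(f"Engine returned HTTP {resp.status}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()
```

On a machine running Docker, try these two calls:

- `engine_get("/version")["Version"]` returns the Engine’s version.
- `engine_get("/containers/json")` lists the running containers.

This is roughly the information `docker version` and `docker ps` display.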

The server, also called the Docker Engine, sits in the background and takes care of building and running Docker containers. Technically, a container is just a process, like any other process running on your computer, but it is a special kind of process. As I mentioned earlier, unlike virtual machines, containers don’t contain a full-blown operating system. Instead, all containers on a host share the operating system of the host; more accurately, they all share the host’s kernel. A kernel is the core of an operating system, like the engine of a car: it’s the part that manages all applications and hardware resources such as memory and CPU.
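You can see the shared kernel for yourself with a small Python sketch; the `kernel_info` name is hypothetical. It assumes a Linux host with Docker and Python installed.

First run the sketch on the host. Then run the equivalent one-liner in a container:

`docker run --rm python:3 python -c "import platform; print(platform.release())"`

Both should print the same kernel release, because the container has no kernel of its own.

```python
import platform


def kernel_info() -> tuple[str, str]:
    """Hypothetical helper: report the kernel this Python process runs on."""
    # platform.system() gives the OS/kernel name (e.g. "Linux"); on Linux,
    # platform.release() is the kernel release, the same value `uname -r` prints.
    return platform.system(), platform.release()


name, release = kernel_info()
print(f"{name} kernel {release}")
```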

Every operating system has its own kernel, and these kernels have different APIs. That is why we cannot run a Windows application on Linux: under the hood, the application needs to talk to the kernel of the underlying operating system. So on a Linux machine, we can only run Linux containers, because those containers need a Linux kernel. On a Windows machine, however, we can run both Windows and Linux containers. Windows 10 now ships with a custom build of the Linux kernel (as part of WSL 2, the Windows Subsystem for Linux). This is in addition to the Windows kernel that has always been in Windows; it’s not a replacement.

With this Linux kernel, we can now run Linux applications natively on Windows, so on Windows we can run both Linux and Windows containers. Windows containers share the Windows kernel, and Linux containers share the Linux kernel. macOS has its own kernel, which is different from the Linux and Windows kernels and has no native support for containerized applications. So Docker on Mac uses a lightweight Linux VM to run Linux containers.
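The rules in the last two paragraphs can be summarized as a lookup table. The Python sketch below is a hypothetical helper; `container_support` is not a Docker API. It takes the host OS name as reported by `platform.system()` and returns which kinds of containers that host can run, and how.

```python
def container_support(host_os: str) -> dict[str, str]:
    """Hypothetical helper: which containers a host OS can run, and how.

    `host_os` is the value returned by platform.system().
    """
    if host_os == "Linux":
        # Linux containers use the host's Linux kernel directly.
        return {"linux": "native"}
    if host_os == "Windows":
        # Windows containers share the Windows kernel; Linux containers use
        # the Linux kernel that now ships alongside it.
        return {"windows": "native", "linux": "built-in Linux kernel"}
    if host_os == "Darwin":  # macOS
        # The macOS kernel has no native container support, so Docker runs
        # Linux containers inside a lightweight Linux VM.
        return {"linux": "lightweight Linux VM"}
    raise ValueError(f"unsupported host OS: {host_os!r}")
```

For example, `container_support(platform.system())` on a Mac returns `{"linux": "lightweight Linux VM"}`.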

To get started with Docker, navigate to this link and install the recommended version of Docker for your machine. Before installing, please read the system requirements to make sure your machine can run Docker.

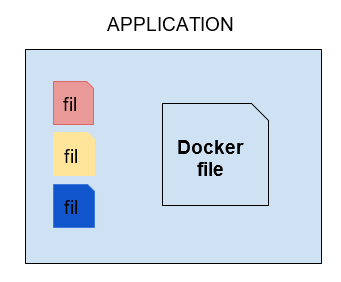

Now let’s talk about your development workflow when using Docker. To start, we take an application and dockerize it; it doesn’t matter what type of application it is or how it is built. Dockerizing means we make a small change so that Docker can run it: we add a Dockerfile to it. A Dockerfile is just a plain text file that includes the instructions Docker uses to package our application into an image. This image contains everything our application needs to run, such as:

● A cut-down operating system (OS)

● A runtime environment (e.g. Node or Python)

● Application files

● Third-party libraries

● Environment variables, etc.
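We will write a real Dockerfile in the next section. For now, here is a minimal Python sketch that renders one, so you can see how each item in the list above maps to a Dockerfile instruction. It assumes a Python application whose entry point is `app.py` and whose third-party libraries are listed in `requirements.txt`. Those file names, the `python:3.12-slim` base image, and the `render_dockerfile` helper are illustrative choices, not requirements.

```python
from typing import Optional


def render_dockerfile(base_image: str, entrypoint: str,
                      env: Optional[dict[str, str]] = None) -> str:
    """Hypothetical helper: render a minimal Dockerfile for a Python app."""
    lines = [
        # Cut-down OS plus the runtime environment, both supplied by the base image.
        f"FROM {base_image}",
        "WORKDIR /app",
        # Third-party libraries, installed from requirements.txt.
        "COPY requirements.txt .",
        "RUN pip install --no-cache-dir -r requirements.txt",
        # The application files themselves.
        "COPY . .",
    ]
    # Environment variables baked into the image.
    for key, value in (env or {}).items():
        lines.append(f"ENV {key}={value}")
    # Command the container runs when it starts (exec form, a JSON array).
    lines.append(f'CMD ["python", "{entrypoint}"]')
    return "\n".join(lines) + "\n"
```

For example, call `render_dockerfile("python:3.12-slim", "app.py", {"APP_ENV": "production"})`. The result starts with `FROM python:3.12-slim` and ends with `ENV APP_ENV=production` followed by `CMD ["python", "app.py"]`.

The sketch copies `requirements.txt` and installs the libraries before copying the rest of the code. This is a common pattern: when only the application files change, Docker can reuse the cached layer that holds the libraries.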

So we create a Dockerfile and give it to Docker to package our application into an image. Once we have an image, we tell Docker to start a container using that image. A container, as I mentioned earlier, is just a process. But it’s a special kind of process, because it has its own file system, which is provided by the image.

So our application gets loaded inside a container, or process, and this is how we run our application locally on our development machine. Instead of launching the application directly in an ordinary process, we tell Docker to run it inside a container, an isolated environment.

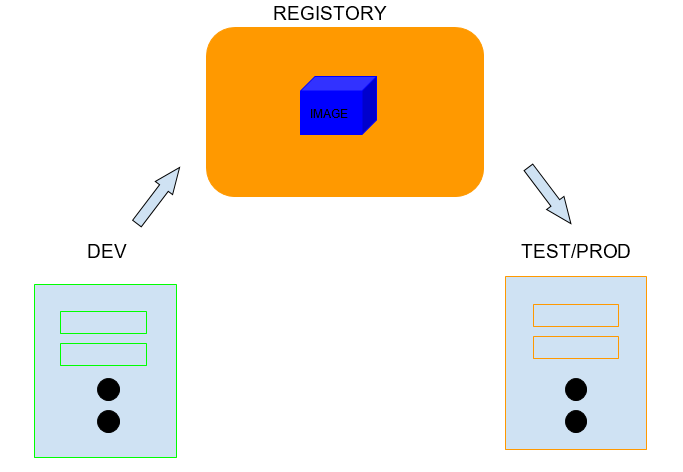
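The build-then-run workflow comes down to two CLI commands, `docker build` and `docker run`. The Python sketch below wraps them. It assumes Docker is installed and a Dockerfile sits in the build context directory. The `myapp:1.0` tag and the helper names are hypothetical.

```python
import subprocess


def build_command(tag: str, context: str = ".") -> list[str]:
    """Hypothetical helper: package the app into an image named `tag`."""
    # `docker build` reads the Dockerfile in `context` and tags the resulting image.
    return ["docker", "build", "-t", tag, context]


def run_command(tag: str, name: str) -> list[str]:
    """Hypothetical helper: start a container (an isolated process) from the image."""
    # --rm removes the container once its process exits.
    return ["docker", "run", "--rm", "--name", name, tag]


def build_and_run(tag: str, name: str, context: str = ".") -> None:
    """Build the image, then run it; stops at the first failing command."""
    for cmd in (build_command(tag, context), run_command(tag, name)):
        subprocess.run(cmd, check=True)
```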

Now here is the beauty of Docker. Once we have the image ready, we push it to a Docker registry like Docker Hub. Docker Hub is to Docker what GitHub is to Git: it’s storage for Docker images that anyone can use. Once our application image is on Docker Hub, we can pull it onto any machine running Docker.

That machine now has the same image we run on our development machine, which contains a specific version of our application with everything it needs. So we can start our application the same way we start it on our development machine: we just tell Docker to start a container using that image.
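Sharing an image through Docker Hub follows the same pattern, shown in the Python sketch below. It makes these assumptions:

- `yourname/myapp:1.0` is a hypothetical Docker Hub repository; Docker Hub names take the form `<username>/<image>:<tag>`.
- You have already run `docker login` on the development machine.

`publish_commands` and `deploy_commands` are hypothetical helpers. They return the commands for each machine, which you could pass to `subprocess.run` one by one.

```python
def publish_commands(local_tag: str, repository: str) -> list[list[str]]:
    """Hypothetical helper: commands for the development machine."""
    return [
        # Give the local image a name that points at the Docker Hub repository...
        ["docker", "tag", local_tag, repository],
        # ...then upload it (requires a prior `docker login`).
        ["docker", "push", repository],
    ]


def deploy_commands(repository: str, name: str) -> list[list[str]]:
    """Hypothetical helper: commands for any other machine running Docker."""
    return [
        # Download the exact image that was pushed.
        ["docker", "pull", repository],
        # Start it in the background (-d) under a readable container name.
        ["docker", "run", "-d", "--name", name, repository],
    ]
```

`docker run` also pulls a missing image automatically; the explicit `docker pull` just makes that step visible.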

So with Docker, we no longer need to maintain long, complex release documents that have to be followed precisely. All the instructions for building an image of an application are written in a Dockerfile. With that, we can package up our application into an image and run it virtually anywhere. This is the beauty of Docker.

I hope this first section of the Docker series gave you a basic understanding of what Docker is. In the next section of this series, we will get our hands dirty with Docker by creating our first Dockerfile.

You can follow me for more interesting topics and drop a like if you found this content valuable and informative.

Goodbye, have a nice day!

Saturday, Sep 18, 2021